Antithetic-variates technique

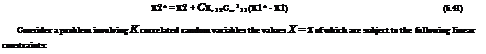

The antithetic-variates technique (Hammersley and Morton, 1956) achieves variance reduction by generating random variates that induce a negative correlation in the quantity of interest between separate simulation runs. Suppose that $\hat{\Theta}_1$ and $\hat{\Theta}_2$ are two unbiased estimators of an unknown quantity $\theta$. The two estimators can be combined to form another estimator as

$$\hat{\Theta}_a = \frac{1}{2}\left(\hat{\Theta}_1 + \hat{\Theta}_2\right) \tag{6.75}$$

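The new estimator $\hat{\Theta}_a$ is also unbiased and has variance

$$\mathrm{Var}(\hat{\Theta}_a) = \frac{1}{4}\left[\mathrm{Var}(\hat{\Theta}_1) + \mathrm{Var}(\hat{\Theta}_2) + 2\,\mathrm{Cov}(\hat{\Theta}_1, \hat{\Theta}_2)\right] \tag{6.76}$$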

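If the two estimators $\hat{\Theta}_1$ and $\hat{\Theta}_2$ were computed by Monte Carlo simulation from two independent sets of random variates, they would be independent, and the variance of $\hat{\Theta}_a$ would be

$$\mathrm{Var}(\hat{\Theta}_a) = \frac{1}{4}\left[\mathrm{Var}(\hat{\Theta}_1) + \mathrm{Var}(\hat{\Theta}_2)\right] \tag{6.77}$$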

From Eq. (6.76), the variance of $\hat{\Theta}_a$ can be reduced if the Monte Carlo simulation generates random variates that produce a strong negative correlation between $\hat{\Theta}_1$ and $\hat{\Theta}_2$.
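As a brief worked illustration, suppose (an assumption made here only to simplify the algebra, not a condition stated in the text) that both estimators have the same variance $\sigma^2$ and a correlation coefficient $\rho$, so that $\mathrm{Cov}(\hat{\Theta}_1, \hat{\Theta}_2) = \rho\sigma^2$. Eq. (6.76) then reduces to

$$\mathrm{Var}(\hat{\Theta}_a) = \frac{1}{4}\left[\sigma^2 + \sigma^2 + 2\rho\sigma^2\right] = \frac{\sigma^2}{2}\,(1 + \rho)$$

With $\rho = 0$ this gives $\sigma^2/2$, the independent case of Eq. (6.77). With $\rho = -0.5$ the variance is halved again to $\sigma^2/4$, and as $\rho$ approaches $-1$ it approaches zero.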

In a Monte Carlo simulation, the values of the estimators $\hat{\Theta}_1$ and $\hat{\Theta}_2$ are functions of the generated random variates, which, in turn, are related to standard uniform random variates. Therefore, $\hat{\Theta}_1$ and $\hat{\Theta}_2$ are functions of two standard uniform random variables $U_1$ and $U_2$. The objective of producing a negative $\mathrm{Cov}[\hat{\Theta}_1(U_1), \hat{\Theta}_2(U_2)]$ can be achieved by producing $U_1$ and $U_2$ that are negatively correlated. However, it would not be desirable to complicate the computational procedure by generating two sets of uniform random variates subject to the constraint of being negatively correlated. One simple way to generate negatively correlated uniform random variates with minimal computation is to let $U_1 = 1 - U_2$. It can be shown that $\mathrm{Cov}(U, 1 - U) = -1/12$ (see Problem 6.31). Hence a simple antithetic-variates algorithm is the following:

1. Generate $u_i$ from U(0, 1), and compute $1 - u_i$, for i = 1, 2, …, n.

2. Compute $\hat{\Theta}_1(u_i)$, $\hat{\Theta}_2(1 - u_i)$, and then $\hat{\Theta}_a$ according to Eq. (6.75).
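The two steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the book's implementation: the quantity to estimate is taken to be $\theta = E[h(U)]$ for a user-supplied function `h`, and the names `antithetic_estimate` and `sample_cov`, the test function $h(u) = u^2$, and the seed are all hypothetical choices. The second part checks the value $\mathrm{Cov}(U, 1 - U) = -1/12$ empirically.

```python
import random


def antithetic_estimate(h, n, rng):
    """Estimate theta = E[h(U)], U ~ U(0, 1), from n antithetic pairs."""
    us = [rng.random() for _ in range(n)]        # step 1: u_i (1 - u_i is formed below)
    theta1 = sum(h(u) for u in us) / n           # estimator based on u_i
    theta2 = sum(h(1.0 - u) for u in us) / n     # estimator based on 1 - u_i
    return 0.5 * (theta1 + theta2)               # step 2: combine as in Eq. (6.75)


def sample_cov(xs, ys):
    """Unbiased sample covariance of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)


rng = random.Random(12345)  # arbitrary fixed seed for reproducibility

# Illustrative target: E[U^2] = 1/3
theta_a = antithetic_estimate(lambda u: u * u, 10_000, rng)

# Empirical check of Cov(U, 1 - U); the theoretical value is -1/12
us = [rng.random() for _ in range(10_000)]
cov_u = sample_cov(us, [1.0 - u for u in us])

print(theta_a, cov_u)
```

Only one set of uniform variates is drawn; the second estimator reuses it through $1 - u_i$, which is what keeps the added computation small.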

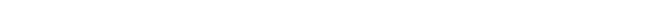

Example 6.10 Develop a Monte Carlo algorithm using the antithetic-variates technique to evaluate the integral G defined by

$$G = \int_a^b g(x)\,dx$$

in which g(x) is a given function.

Solution Applying the Monte Carlo method to estimate the value of G, the preceding integral can be rewritten as

$$G = \int_a^b \left[\frac{g(x)}{f_x(x)}\right] f_x(x)\,dx = E\left[\frac{g(X)}{f_x(X)}\right]$$

where $f_x(x)$ is the PDF adopted for generating the random variates. As can be seen, the original integral becomes the expectation of the ratio of $g(X)$ to $f_x(X)$. Hence the two estimators for G using the antithetic-variates technique can be formulated as

$$\hat{G}_1 = \frac{1}{n}\sum_{i=1}^{n} \frac{g(X_{1i})}{f_x(X_{1i})} \tag{6.78a}$$

$$\hat{G}_2 = \frac{1}{n}\sum_{i=1}^{n} \frac{g(X_{2i})}{f_x(X_{2i})} \tag{6.78b}$$

in which $X_{1i} = F_x^{-1}(U_i)$ and $X_{2i} = F_x^{-1}(1 - U_i)$, with $F_x(\cdot)$ being the CDF of the random variable X. The algorithm for Monte Carlo integration using the antithetic-variates technique is

1. Generate n uniform random variates $u_i$ from U(0, 1), and compute the corresponding $1 - u_i$.

2. Compute $g(x_{1i})$, $f_x(x_{1i})$, $g(x_{2i})$, and $f_x(x_{2i})$, with $x_{1i} = F_x^{-1}(u_i)$ and $x_{2i} = F_x^{-1}(1 - u_i)$.

3. Calculate the values of $\hat{G}_1$ and $\hat{G}_2$ by Eqs. (6.78a) and (6.78b), respectively. Then estimate G by $\hat{G}_a = (\hat{G}_1 + \hat{G}_2)/2$.
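The three steps above might be coded along the following lines. This is a sketch under assumptions: the function name `antithetic_integral` is hypothetical, and the test problem (integrating $e^x$ over [0, 1] with the sampling density $f_x(x) = (1 + x)/1.5$, whose CDF inverts in closed form) was chosen here only for illustration and does not come from the text.

```python
import math
import random


def antithetic_integral(g, pdf, inv_cdf, n, rng):
    """Antithetic-variates estimate of the integral of g over the support of pdf."""
    g1 = g2 = 0.0
    for _ in range(n):
        u = rng.random()                        # step 1: u_i, with 1 - u_i used below
        x1, x2 = inv_cdf(u), inv_cdf(1.0 - u)   # step 2: x_1i and x_2i
        g1 += g(x1) / pdf(x1)
        g2 += g(x2) / pdf(x2)
    g1 /= n                                     # Eq. (6.78a)
    g2 /= n                                     # Eq. (6.78b)
    return 0.5 * (g1 + g2)                      # step 3: average the two estimators


# Hypothetical test problem: G = integral of exp(x) over [0, 1] = e - 1.
# Assumed sampling density f(x) = (1 + x) / 1.5 on [0, 1],
# with CDF F(x) = (x + x**2 / 2) / 1.5 and inverse F^{-1}(u) = sqrt(1 + 3u) - 1.
def pdf(x):
    return (1.0 + x) / 1.5


def inv_cdf(u):
    return math.sqrt(1.0 + 3.0 * u) - 1.0


g_hat = antithetic_integral(math.exp, pdf, inv_cdf, 5_000, random.Random(1))
print(g_hat, math.e - 1.0)
```

The inverse CDF is passed in as a function so that the same routine works for any sampling density whose CDF can be inverted, which is what step 2 requires.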

In the case that X has a uniform distribution with $f_x(x) = 1/(b - a)$, $a < x < b$, the estimate of G by the antithetic-variates technique can be expressed as

$$\hat{G}_a = \frac{b - a}{2n}\sum_{i=1}^{n}\left[g(x_{1i}) + g(x_{2i})\right] \tag{6.79}$$
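For the uniform case, Eq. (6.79) can be sketched and compared with ordinary sample-mean Monte Carlo using the same number of function evaluations. The function names, the test integrand $g(x) = x^2$ on [0, 2] (exact value 8/3), the replication counts, and the seed are assumptions made for this illustration.

```python
import random
import statistics


def antithetic_uniform(g, a, b, n, rng):
    """Eq. (6.79): antithetic estimate with X ~ U(a, b), using n pairs."""
    total = 0.0
    for _ in range(n):
        u = rng.random()
        x1 = a + (b - a) * u             # x_1i = F^{-1}(u_i)
        x2 = a + (b - a) * (1.0 - u)     # x_2i = F^{-1}(1 - u_i)
        total += g(x1) + g(x2)
    return (b - a) * total / (2 * n)


def crude_uniform(g, a, b, m, rng):
    """Ordinary sample-mean estimate with m independent points."""
    return (b - a) * sum(g(a + (b - a) * rng.random()) for _ in range(m)) / m


def g(x):
    return x * x                         # illustrative integrand; integral over [0, 2] is 8/3


rng = random.Random(99)
n, reps = 100, 200

# Both estimators use 2n evaluations of g per replication.
anti = [antithetic_uniform(g, 0.0, 2.0, n, rng) for _ in range(reps)]
crude = [crude_uniform(g, 0.0, 2.0, 2 * n, rng) for _ in range(reps)]

mean_anti = statistics.mean(anti)
var_anti = statistics.variance(anti)
var_crude = statistics.variance(crude)
print(mean_anti, var_anti, var_crude)
```

Repeating each estimator over many replications and comparing the spread of the results is one simple way to see the variance reduction for a given integrand.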

Rubinstein (1981) showed that the antithetic-variates estimator, in fact, is more efficient if g (x) is a continuous monotonically increasing or decreasing function with continuous first derivatives.
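The role of monotonicity can be illustrated numerically, though the following is only an illustration and not Rubinstein's argument. The sketch compares the sample correlation between $g(U)$ and $g(1 - U)$ for a monotone function and for a function symmetric about 0.5. Both test functions, the helper `correlation`, and the seed are assumptions chosen here.

```python
import math
import random


def correlation(xs, ys):
    """Sample (Pearson) correlation coefficient of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def monotone(u):
    return math.exp(u)          # monotonically increasing on [0, 1]


def symmetric(u):
    return (u - 0.5) ** 2       # not monotone: symmetric about u = 0.5


rng = random.Random(7)
us = [rng.random() for _ in range(5_000)]

rho_monotone = correlation([monotone(u) for u in us],
                           [monotone(1.0 - u) for u in us])
rho_symmetric = correlation([symmetric(u) for u in us],
                            [symmetric(1.0 - u) for u in us])
print(rho_monotone, rho_symmetric)
```

For the monotone function the pair $g(u)$, $g(1 - u)$ is strongly negatively correlated, which is the situation Eq. (6.76) rewards. For the symmetric function $g(u) = g(1 - u)$, so the pair is perfectly positively correlated and the second evaluation adds no information.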

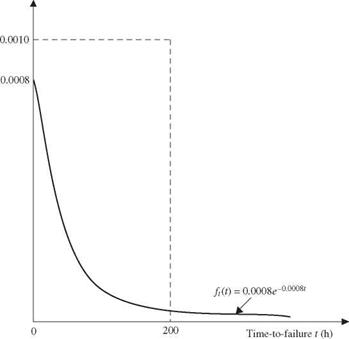

Example 6.11 Referring to pump reliability Example 6.6, estimate the pump failure probability using the antithetic-variates technique along with the sample-mean Monte Carlo algorithm with n = 1000. The PDF selected is a uniform distribution U(0, 200). Also, compare the results with those obtained in Examples 6.6, 6.7, and 6.8.

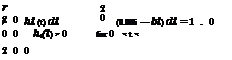

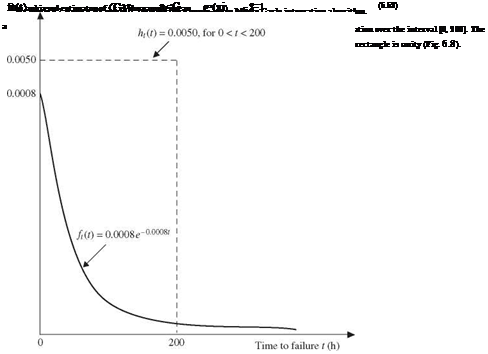

Solution Referring to Example 6.7, uniform distribution U(0, 200) has a height of 0.005 (see Fig. 6.8). The antithetic-variate method along with the sample-mean Monte Carlo algorithm for evaluating the pump failure probability can be outlined as follows:

1. Generate n pairs of standard uniform random variates $(u_i, 1 - u_i)$ from U(0, 1).

2. Let $t_{1i} = 200u_i$ and $t_{2i} = 200(1 - u_i)$. Compute $f_t(t_{1i})$ and $f_t(t_{2i})$.

3. Estimate the pump failure probability, according to Eq. (6.79), as

$$\hat{p}_{f,a} = \frac{200}{2n}\sum_{i=1}^{n}\left[f_t(t_{1i}) + f_t(t_{2i})\right]$$
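The algorithm above can be sketched as follows. The time-to-failure density $f_t$ is defined in Example 6.6, which is not reproduced in this section, so the code assumes an exponential density with rate 0.0008 per hour. That assumption is adopted only because it reproduces the exact failure probability of 0.147856 quoted for this example, and it should be replaced by the actual density from Example 6.6. The seed is arbitrary, so the estimate will not match the reported value digit for digit.

```python
import math
import random

RATE = 0.0008    # ASSUMED failure rate per hour; not stated in this section
T_MAX = 200.0    # upper limit of the sampling distribution U(0, 200)


def pump_pdf(t):
    """Assumed exponential time-to-failure density f_t(t)."""
    return RATE * math.exp(-RATE * t)


def antithetic_failure_probability(n, rng):
    """Steps 1 to 3: antithetic estimate of the integral of f_t over [0, 200]."""
    total = 0.0
    for _ in range(n):
        u = rng.random()                          # step 1: pair (u_i, 1 - u_i)
        t1, t2 = T_MAX * u, T_MAX * (1.0 - u)     # step 2: t_1i and t_2i
        total += pump_pdf(t1) + pump_pdf(t2)
    return T_MAX * total / (2 * n)                # step 3: Eq. (6.79) with b - a = 200


p_hat = antithetic_failure_probability(1000, random.Random(2024))
p_exact = 1.0 - math.exp(-RATE * T_MAX)           # closed form under the assumed density
print(p_hat, p_exact)
```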

Using this algorithm, the estimated pump failure probability is $\hat{p}_{f,a} = 0.14785$. Compared with the exact failure probability $p_f = 0.147856$, the estimate from the antithetic-variates algorithm with n = 1000 and the simple uniform distribution is accurate to within 0.00406 percent. The standard deviation s associated with the 2n random samples is 0.00669. According to Eq. (6.74), the standard error associated with $\hat{p}_{f,a}$ can be computed as $s/\sqrt{2n} = 0.00015$. The skewness coefficient from the 2n random samples is 0.077, which is close to zero. Hence, by the normality approximation, the 95 percent confidence interval containing the exact failure probability $p_f$ is (0.14756, 0.14814).
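The arithmetic behind these figures can be retraced with a few lines of Python. The inputs are the values reported above; the variable names are illustrative, and the standard error follows the text's use of $s/\sqrt{2n}$.

```python
import math
from statistics import NormalDist

p_hat, p_exact = 0.14785, 0.147856   # estimate and exact value reported above
s, n = 0.00669, 1000                 # sample standard deviation of the 2n samples

rel_error_pct = abs(p_hat - p_exact) / p_exact * 100   # relative error in percent
std_error = s / math.sqrt(2 * n)                       # s / sqrt(2n)
z = NormalDist().inv_cdf(0.975)                        # about 1.96 for a 95 percent interval
ci = (p_hat - z * std_error, p_hat + z * std_error)

print(round(rel_error_pct, 5), round(std_error, 5), tuple(round(c, 5) for c in ci))
```

Rounded to five decimal places, these calculations agree with the relative error, standard error, and confidence limits quoted in the text.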

Comparing the solutions with those of Examples 6.6, 6.7, and 6.9, it is observed that the antithetic-variate algorithm is very accurate in estimating the probability.