Latin hypercube sampling technique

The Latin hypercube sampling (LHS) technique is a special method under the umbrella of stratified sampling that selects random samples of each random variable over its range in a stratified manner. Consider a multiple integral involving K random variables

G = g (x) fx (x) d x = E [g (X)] (6.91)

J xeE

where X = (X1, X 2,…, XK )t is an K-dimensional vector of random variables, and fx(x) is their joint PDF.

The LHS technique divides the plausible range of each random variable into M(M > K in practice) equal-probability intervals. Within each interval, a single random variate is generated resulting in M random variates for each random variable. The expected value of g(X), then, is estimated as

1 M

G = g( X 1m, X 2m, …, XKm ) (6.92)

m=1

where Xkm is the variate generated for the kth random variable Xk in the mth set.

More specifically, consider a random variable Xk over the interval of [xk, xk ] following a specified PDF fk(xk). The range [xk, xk] is partitioned into M intervals, that is,

xk — xk0 < xk1 < xk2 < ”’ < xk, M—1 < xkM — xk (6.93)

in which P (xkm < Xk < xk, m+1) = 1/M for all m = 0, 1, 2,…, M — 1. The end points of the intervals are determined by solving

|

/ |

xkm m

fk (xk) dxk = m (6.94)

-k

where Fk ( ) is the CDF of the random variable Xk. The LHS technique, once the end points for all intervals are determined, randomly selects a single value

in each of the intervals to form the M samples set for Xk. The sample values can be obtained by the CDF-inverse or other appropriate method.

To generate M values of random variable Xk from each of the intervals, a sequence of probability values {pk1, pk2,…, pk, M-1, pkM} is generated as

m — 1

Pkm = M + Zkm m = 1,2,…, M (6.95)

in which {zk1, Zk2,…, Zk, M-1, ZkM} are independent uniform random numbers from Z ~ U(0, 1/M). After {pk1, pk2,…, pk, M-1, pkM} are generated, the corresponding M random samples for Xk can be determined as

Xkm = F-l( pkm) m = 1,2,…, M (6.96)

Note that pkm determined by Eq. (6.96) follows

pk1 < pk2 < ••• < pkm < ••• < pk, M-1 < pkM (6.97)

and accordingly,

xk1 — xk2 — — xkm — ‘ — xk, M-1 — xkM (6.98)

To make the generated {xk1, xk2,…, xk, M-1, xkM} a random sequence, random permutation can be applied to randomize the sequence. Alternatively, Latin hypercube samples for K random variables with size M can be generated by (Pebesma and Heuvelink, 1999), that is,

xkm = Fj-1^Skm MUkm ) (6.99)

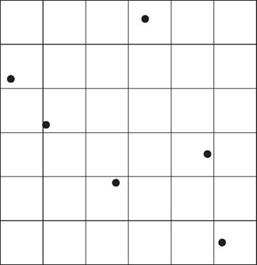

where skm is a random permutation of 1 to M, and ukm is a uniformly distributed random variate in [0, 1]. Figure 6.14 shows the allocation of six samples by the LHS technique for a problem involving two random variables. It is seen that in each row or column of the 6 x 6 matrix only one cell contains a generated sample. The LHS algorithm can implemented as follows:

1. Select the number of subintervals M for each random variable, and divide the plausible range into M equal-probability intervals according to Eq. (6.94).

2. Generate M standard uniform random variates from U(0, 1/M).

3. Determine a sequence of probability values pkm, for k = 1, 2,…, K; m = 1,2,…, M, using Eq. (6.95).

4. Generate random variates for each of the random variables using an appropriate method, such as Eq. (6.96).

5. Randomly permutate generated random sequences for all random variables.

6. Estimate G by Eq. (6.92).

Using the LHS technique, the usual estimators of G and its distribution function are unbiased (McKay, 1988). Moreover, when the function g(X) is

x21 x20

![]()

![]()

![]()

![]()

monotonic in each of the Xk, the variances of the estimators are no more than and often less than the variances when random variables are generated from simple random sampling. McKay (1988) suggested that the use of twice the number of involved random variables for sample size (M > 2K) would be sufficient to yield accurate estimation of the statistics model output. Iman and Helton (1985) indicated that a choice of M equal to 4/3K usually gives satisfactory results. For a dynamic stream water-quality model over a 1-year simulation period, Manache (2001) compared results from LHS using M = 4/3K and M = 3K and found reasonable convergence in the identification of the most sensitive parameters but not in calculation of the standard deviation of model output. Thus, if it is computationally feasible, the generation of a larger number of samples would further enhance the accuracy of the estimation. Like all other variance-reduction Monte Carlo techniques, LHS generally would require fewer samples or model evaluations to achieve an accuracy level comparable with that obtained from a simple random sampling scheme. In hydrosystems engineering, the LHS technique has been applied widely to sediment transport (Yeh and Tung, 1993; Chang et al., 1993), water-quality modeling (Jaffe and Ferrara, 1984; Melching and Bauwens, 2001; Sohrabi et al., 2003; Manache and Melching, 2004), and rainfall-runoff modeling (Melching, 1995; Yu et al., 2001; Christiaens and Feyen, 2002; Lu and Tung, 2003).

monotonic in each of the Xk, the variances of the estimators are no more than and often less than the variances when random variables are generated from simple random sampling. McKay (1988) suggested that the use of twice the number of involved random variables for sample size (M > 2K) would be sufficient to yield accurate estimation of the statistics model output. Iman and Helton (1985) indicated that a choice of M equal to 4/3K usually gives satisfactory results. For a dynamic stream water-quality model over a 1-year simulation period, Manache (2001) compared results from LHS using M = 4/3K and M = 3K and found reasonable convergence in the identification of the most sensitive parameters but not in calculation of the standard deviation of model output. Thus, if it is computationally feasible, the generation of a larger number of samples would further enhance the accuracy of the estimation. Like all other variance-reduction Monte Carlo techniques, LHS generally would require fewer samples or model evaluations to achieve an accuracy level comparable with that obtained from a simple random sampling scheme. In hydrosystems engineering, the LHS technique has been applied widely to sediment transport (Yeh and Tung, 1993; Chang et al., 1993), water-quality modeling (Jaffe and Ferrara, 1984; Melching and Bauwens, 2001; Sohrabi et al., 2003; Manache and Melching, 2004), and rainfall-runoff modeling (Melching, 1995; Yu et al., 2001; Christiaens and Feyen, 2002; Lu and Tung, 2003).

Melching (1995) compared the results from LHS with M = 50 with those from Monte Carlo simulation with 10,000 simulations and also with those from FOVE and Rosenbleuth’s method for the case of using HEC-1 (U. S. Army Corps

of Engineers, 1991) to estimate flood peaks for a watershed in Illinois. All methods yielded similar estimates of the mean value of the predicted peak flow. The variation of standard deviation estimates among the methods was much greater than that of the mean value estimates. In the estimation of the standard deviation of the peak flow, LHS was found to provide the closest agreement to Monte Carlo simulation, with an average error of 7.5 percent and 10 of 16 standard deviations within 10 percent of the value estimated with Monte Carlo simulation. This indicates that LHS can yield relatively accurate estimates of the mean and standard deviation of model output at a far smaller computational burden than Monte Carlo simulation. A detailed description of LHS, in conjunction with the regression analysis for uncertainty and sensitivity analysis, can be found elsewhere (Tung and Yen, 2005, Sec. 6.8).

Example 6.14 Referring to Example 6.7, apply the Latin hypercube sampling technique to evaluate the pump failure probability in the time interval [0, 200 h].

Solution Again, the uniform distribution U(0, 200) is selected along with the sample- mean Monte Carlo method for carrying out the integration. In Latin hypercube sampling, the interval [0, 200] is divided into 1000 equal-probability subintervals, with each having a probability of 0.001. For U(0, 200), the end points of each subinterval can be obtained easily as

t0 = 0 t1 = °-2, t2 = °-4, ■ ■■ , £999 = 199.8, t1000 = 200

By the LHS, one random variate for each subinterval is generated. In other words, generate a single random variate from

Um ~ U[0.2(m — 1), 0.2m] m = 1, 2,…, 1000

The algorithm for estimating the pump failure probability involves the following steps:

1. Initialize the subinterval index m = 0.

2. Let m = m + 1. Generate one standard uniform random variate um, and transform

it into the random variate from the corresponding subinterval by tm = 0.2(m — 1) +

um.

3. If m < 1000, go to step 2; otherwise, compute the pump failure probability as

1 1000

pf = 1000 £ ft(tm)

m=1

and the associated standard deviation as

__ sm

Spf = V1000

with sm representing the standard deviation of 1000 computed function values

ft ™.

The results from the numerical simulation are

The 95 percent confidence interval is (0.14743, 0.14828). The value of pf is extremely close to the exact solution of 0.147856, and only 1000 simulations were used.

Leave a reply